Last week, in a conversation with one of my non-techy friends, I said something along the lines of machine learning being an integral part of our everyday lives, and it occurred to me that he didn’t really understand what I was saying. Machine learning, artificial intelligence, computer vision, deep learning, etc. all sounded the same to him.

And this is a problem amongst many of my friends and acquaintances as well. Some of them don’t even want to delve into the tech world because they’re not well versed in tech terminology.

And, honestly, that is a very easy problem to fix, which is why today’s article is your AI buzzwords 101. We’re going to demystify these words, build some intuition for what they mean, and see how they show up in our everyday digital lives.

Artificial Intelligence

Let’s start with the most popular one – Artificial Intelligence.

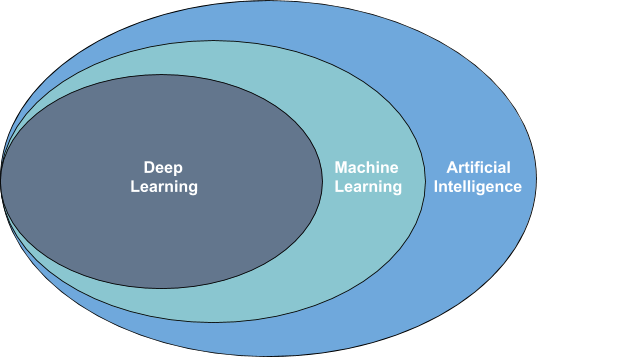

Artificial Intelligence is a subfield of computer science. It studies techniques and strategies for solving problems in a more human-like way.

Up until the era of big data, AI was limited in what it could do, because each program was written to solve a very specific problem in a very specific domain. In spite of that limitation, those techniques are still in use today because they provide efficient solutions to many problems, such as search and path finding.

One interesting use case of classical artificial intelligence algorithms is game playing. We have seen the likes of Deep Blue, which beat the reigning world chess champion Garry Kasparov in 1997. These programs are called game-playing agents, and they search ahead through possible moves and countermoves to anticipate what the opponent might do. This lets the agent pick the strongest move it can find, and so beat human players at sophisticated games like chess.
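To make the idea of "looking ahead" a little more concrete, here is a minimal sketch in Python. It is not how Deep Blue worked (chess engines are far more elaborate); it assumes a made-up toy game where two players take turns removing 1 to 3 stones from a pile, and whoever takes the last stone wins. The function names `can_win` and `best_move` are my own, chosen for illustration.

```python
from functools import lru_cache


@lru_cache(maxsize=None)
def can_win(stones: int) -> bool:
    """Return True if the player about to move can force a win."""
    # Try every legal move. If any move leaves the opponent in a
    # position they cannot win from, the current player can win.
    # With 0 stones there are no legal moves, so the result is False.
    return any(
        not can_win(stones - take)
        for take in (1, 2, 3)
        if take <= stones
    )


def best_move(stones: int) -> int:
    """Pick how many stones to take by searching the game ahead."""
    for take in (1, 2, 3):
        if take <= stones and not can_win(stones - take):
            return take
    # Every move loses against perfect play, so just take one stone.
    return 1


print(best_move(5))  # leaves 4 stones, a losing position for the opponent
```

The agent never "understands" the game. It simply tries each move, imagines the opponent's best reply, and repeats until the game ends, which is the same basic idea behind classical game-playing programs.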

AI has changed course dramatically since then. With the advent of the big data era, we now have access to more data than we could have hoped for. Nowadays, rather than solving a specific problem with predefined rules, AI leverages huge amounts of data to learn complex patterns, so that it can be used in a more general way. This is where subfields of AI such as machine learning, computer vision, etc. come into play. Let’s take a closer look at those fields.

Machine Learning

Machine Learning is pretty self-explanatory. It is in the name. If I were told to explain it in a single line, I’d say it’s simply a way of making “machines learn”.

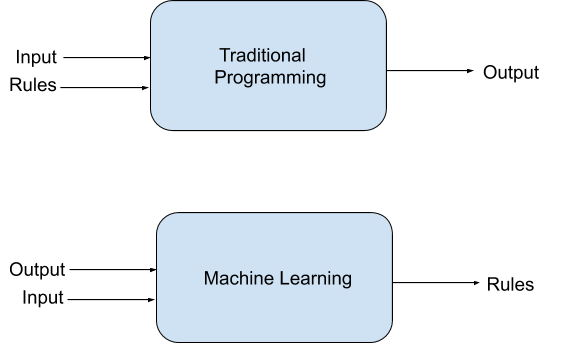

It can be considered a subfield of artificial intelligence. In normal computer programs, we write all the rules explicitly and then give the program an input to get an output. Machine learning takes a different approach, different enough that it can be treated as a separate paradigm of programming a computer.

Instead of explicitly stating the rules, we give the program the inputs and the outputs, and it learns the rules by itself! This is analogous to teaching a child how to read and write.

Impressive, right?
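Here is a small sketch of what "learning the rule from inputs and outputs" can look like, assuming the simplest possible case: the hidden rule is a straight line. The data and the function name `fit_line` are made up for illustration, and the method shown (ordinary least squares) is just one of many ways a machine can learn.

```python
def fit_line(xs, ys):
    """Learn slope and intercept of a line from example inputs and outputs."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Ordinary least squares: how much y changes, on average, as x changes.
    slope = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / sum(
        (x - mean_x) ** 2 for x in xs
    )
    intercept = mean_y - slope * mean_x
    return slope, intercept


# We never tell the program that the rule is "double it and add one".
inputs = [1, 2, 3, 4, 5]
outputs = [3, 5, 7, 9, 11]

slope, intercept = fit_line(inputs, outputs)
prediction = slope * 10 + intercept  # apply the learned rule to a new input
print(slope, intercept, prediction)
```

Notice that the rule itself never appears in the code. The program recovers it from the examples and can then apply it to an input it has never seen.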

Machine learning is everywhere in our digital products because a lot of things cannot be explicitly programmed. Self-driving cars, search engines, photo taking and editing, facial recognition, chatbots, document search, email spam filtering: everywhere we look, we see this paradigm in use.

One use case that almost all of us encounter is personalized recommendations. We get recommendations based on our activity on applications and websites such as YouTube, Amazon and Netflix. This is just one of the hundreds of applications that I can think of, and it would be exhausting even just to name them all!

It’s a broad field, primarily a hybrid of mathematics, computer science, and programming, and it has been growing rapidly ever since the boom of big data.

Deep Learning

Deep learning is a subfield of machine learning that uses a particular type of mathematical model to do the learning. Loosely speaking, these models try to mimic the way the human brain learns, which is why they are called “neural networks”.

Though these systems do not work the way the brain actually learns, it’s safe to say that they are inspired by the biology of learning.
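For the curious, here is a rough sketch of the basic building block of a neural network, a single artificial "neuron". The input values, weights, and bias below are made-up numbers for illustration. In a real network there are many of these units stacked in layers, and the weights are learned from data rather than typed in by hand.

```python
import math


def neuron(inputs, weights, bias):
    """One artificial neuron: a weighted sum squashed into the range 0 to 1."""
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    # The sigmoid function turns any number into a value between 0 and 1,
    # loosely like a biological neuron deciding how strongly to "fire".
    return 1 / (1 + math.exp(-total))


# Hypothetical numbers, chosen only to show the mechanics.
signal = neuron(inputs=[0.9, 0.1], weights=[2.0, -1.0], bias=-0.5)
print(signal)
```

Learning, in this picture, means nudging the weights and the bias until the outputs match the examples we show the network.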

Nonetheless, deep learning approaches are currently among the most effective ways to teach a machine. They are great at learning complex patterns in data. For instance, you can create a program with neural networks that transfers the style of an artwork to a photo, as if the photo had been painted by the artist themselves! Or you can use one to detect whether there is any object in a picture, identify what that object is, and even generate a human-like caption for the picture.

The use cases for this subfield are too numerous to list all at once. Many machine learning and pattern recognition tasks are done through this approach. It is used for space exploration and satellite image processing, for discovering novel drugs and their use cases, for generating parts of sophisticated aircraft and spacecraft, for making smarter robots that can adapt to changing environments, for processing complex patterns on social networks, and for guiding self-driving cars.

The applications are practically endless, and novel ones are being discovered every day.

Computer Vision

Computer Vision is a field of study where we program computers to interpret the visual world around us.

We have already talked about machine learning and deep learning, and these approaches are heavily used in this field.

Take a simple example of identifying a cute kitten in a picture. You want your computer to classify a picture as a cat or not a cat. Now if you were to write the rules for recognizing cats by hand, think about the number of cases you would have to handle. You would have to consider the shape, size, and color of the cat, not to mention how much each of these can vary. Then there is the position and orientation of the cat, whether the picture was taken from above or below, etc. Long story short, you would go mad even attempting a handwritten classifier, and there would always be some stray edge case that breaks your program.

So the current solution is to use the learning techniques mentioned above to build such a sophisticated program.

Computer vision has many more applications in our digital life. It’s not limited to classifying or detecting objects but also covers how computers see and manipulate images. It’s used as the eyes of a self-driving car, to tag people in a picture, to help medical professionals identify diseases, in industrial sectors for quality control and defect detection, in security and surveillance for threat detection, and much, much more. It’s even being used to generate pictures and videos, and recently it’s being applied to detect, analyze and generate 3-dimensional images and models. The field is moving at a very fast pace and new discoveries are being made every day.

Natural Language Processing (NLP)

NLP stands for Natural Language Processing. It’s a subfield of computer science that draws on linguistics. The gist is to make computers understand human language and generate human-like responses, so that our day-to-day lives can be a little bit easier.

We deal with text-based data in all aspects of our lives. You are currently reading this article, and this is an example of text-based data. What if you had thousands and thousands of articles and you were told to find the important entities, such as names of persons, places, and dates, in all of them? And what if you were also told to group that huge number of articles by topic?

For example, articles 1 and 2 talk about technology, article 3 talks about sports, and so on. This is a herculean task by itself and would take weeks if not months to complete by hand. That’s where natural language processing comes into play. Using clever statistical and machine learning algorithms, computers can observe patterns within texts to classify them, group them, and even recognize interesting entities. Besides, this field focuses on understanding natural languages and generating appropriate responses, as we see in chatbots and in next-word prediction in Gmail and search queries.
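As a rough illustration of grouping text by topic, here is a deliberately crude sketch. It assumes a handful of made-up example sentences that are already labeled, counts which words appear under each topic, and then assigns a new sentence to the topic whose words it shares the most. The names `train` and `classify` are my own, and real NLP systems use far more sophisticated statistics than simple word counts.

```python
from collections import Counter


def train(examples):
    """Count how often each word appears under each topic."""
    counts = {}
    for text, topic in examples:
        counts.setdefault(topic, Counter()).update(text.lower().split())
    return counts


def classify(text, counts):
    """Pick the topic whose training words overlap most with the text."""
    words = text.lower().split()
    scores = {
        topic: sum(word_counts[w] for w in words)
        for topic, word_counts in counts.items()
    }
    return max(scores, key=scores.get)


# Made-up training examples, labeled by hand.
examples = [
    ("the new phone has a faster chip", "technology"),
    ("software update improves the laptop battery", "technology"),
    ("the team won the final match", "sports"),
    ("the striker scored a late goal", "sports"),
]

model = train(examples)
print(classify("a late goal decided the match", model))
```

A counter this simple is easily fooled by common words like "the", which is one reason practical systems weigh words by how informative they are and learn from far more examples.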

Just like computer vision, this field is moving at a very fast pace and new discoveries are made every single day.

Final Words

We started off slow with just five of the most commonly used pieces of tech jargon out of many, many others, but we do plan on bringing you more in the future. I hope this was helpful to someone. The best advice I have for you is that you only need to be enthusiastic and have fun while learning, and the fancy lingo will soon follow!